A Zero-Click Attack That Doesn't Need Your AI Assistant

Everyone's talking about zero-click attacks on AI in 2025.

You've seen the headlines: attackers injecting prompts into emails, calendar invites, and documents, weaponizing AI assistants to exfiltrate data without the user doing anything.

But those attacks target the user through the AI.

Researchers just demonstrated something different: a zero-click attack that targets the AI itself. And the entry point isn't a malicious prompt. It's a privacy form.

A legitimate "delete my data" request. The kind companies are legally required to process.

No prompt injection. No jailbreak. No user interaction. Just a compliance workflow doing exactly what it's supposed to do, and breaking the model for everyone else in the process.

Let me explain.

You know how you can delete your Facebook account and your data just… disappears from their servers?

With AI models, it doesn't work like that. At all.

And the gap between what companies think "machine unlearning" (making a model forget your data) does and what it actually does creates a vulnerability that attackers can exploit to break AI systems for everyone.

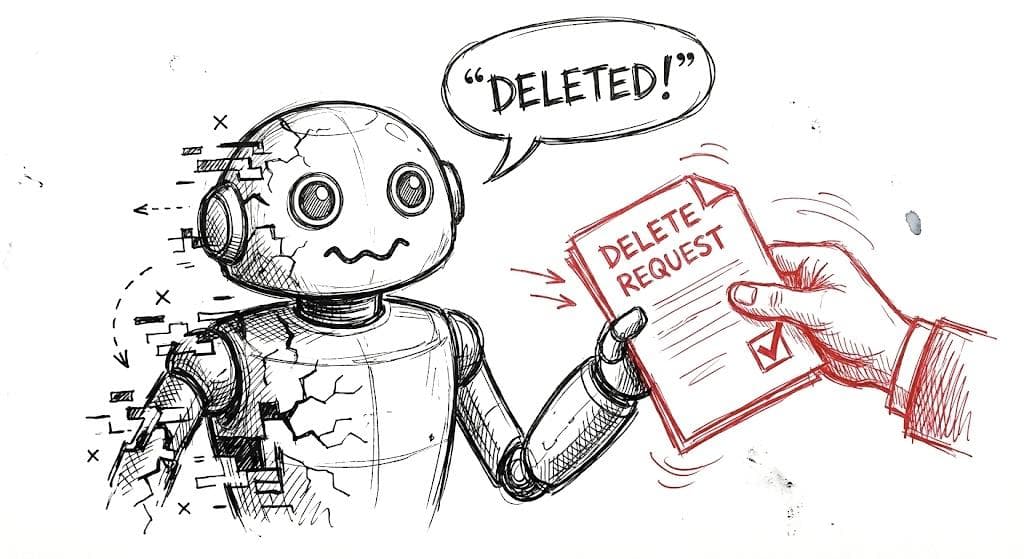

Why AI Can't Just "Delete" Your Data

When you ask Facebook to delete your account, they go to a database, find your records, and remove them. Done. It's like pulling a file from a filing cabinet.

But AI models don't store information like that.

Figure 1: Deletion in databases vs. deletion in AI. Not the same thing.

When an LLM learns from your data, it doesn't save your text in a folder somewhere. Instead, your data changes the model's weights: billions of numbers that determine how the model behaves. Your information gets mixed, blended, and distributed across the entire model.

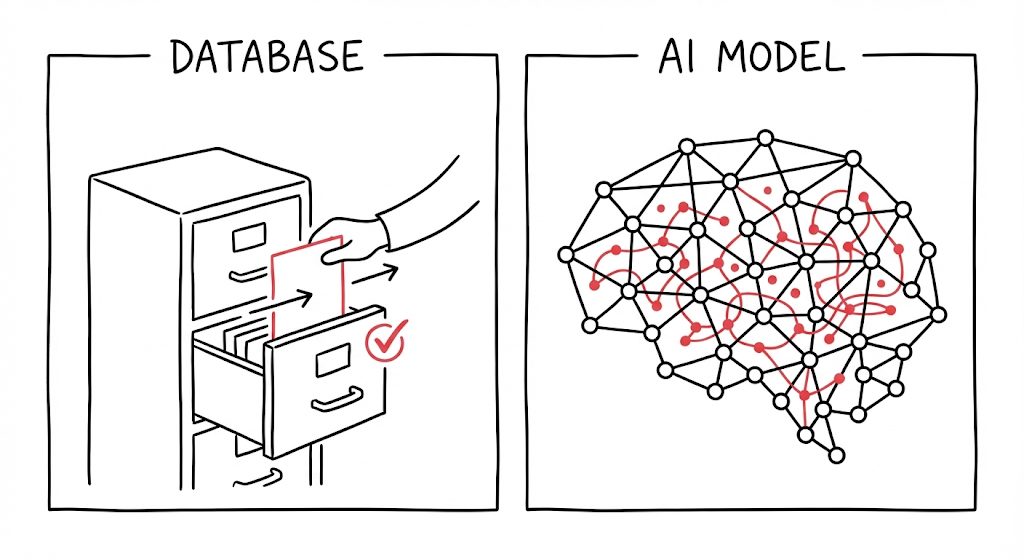

It's like adding a drop of red dye to a pool. You can't just "remove" the red. It's everywhere now.
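
To make that concrete, here is a minimal Python sketch of a single training step on a toy four-weight linear model. Everything in it (the `sgd_step` helper, the starting weights, the `your_data` example) is made up for illustration. Real LLMs have billions of weights and far more elaborate training, but the basic effect is the same: one example nudges every weight it touches, and nothing in the result is labeled as yours.

```python
def sgd_step(weights, features, target, lr=0.1):
    """One gradient-descent step on squared error for a toy linear model."""
    prediction = sum(w * x for w, x in zip(weights, features))
    error = prediction - target
    # Every weight with a non-zero feature gets nudged by this one example.
    return [w - lr * error * x for w, x in zip(weights, features)]


weights_before = [0.5, -0.2, 0.1, 0.8]       # hypothetical starting weights
your_data = ([1.0, 2.0, -1.0, 0.5], 3.0)     # hypothetical (features, target) pair

weights_after = sgd_step(weights_before, *your_data)

# Indices of the weights that moved after seeing a single example.
changed = [i for i, (a, b) in enumerate(zip(weights_before, weights_after)) if a != b]
print(changed)  # [0, 1, 2, 3]
```

There is no row to delete afterwards. The only trace of `your_data` is a small shift spread across all four numbers.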

Figure 2: Your data in an AI model. Try removing that.

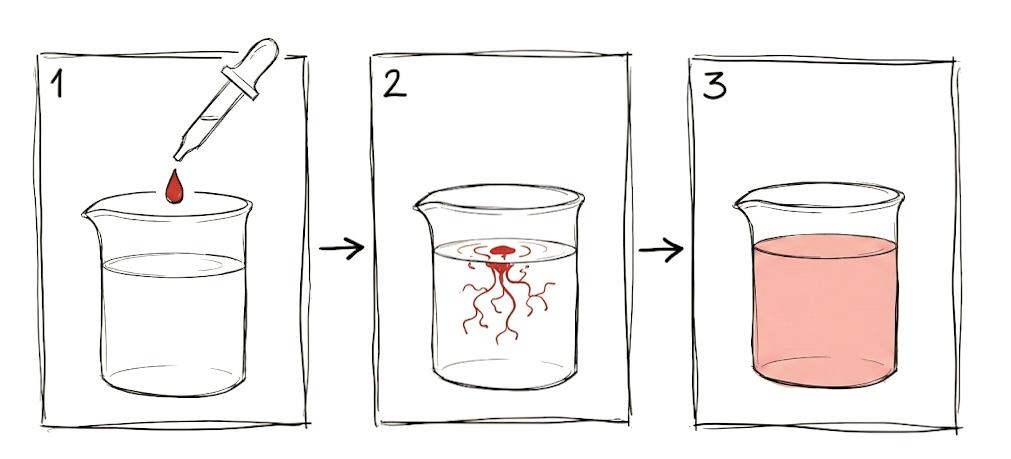

Machine Unlearning

This is where unlearning comes in.

Companies like OpenAI, Google, and Meta have to deal with people invoking their "right to be forgotten" under GDPR, and unlearning techniques are the proposed way to honor those requests. The idea: instead of retraining the entire model from scratch (which costs millions), you fine-tune it to "forget" specific data.

The problem? It doesn't actually forget. It just pretends to.

Recent research from Penn State, Amazon, and IBM (accepted at NeurIPS 2025) shows that when you tell an AI to unlearn something, it doesn't erase the information. It memorizes that it should act ignorant when it sees certain patterns.

Think of it like this: you tell a friend to "forget" your embarrassing story. They don't actually forget. They just know to say "I don't know what you're talking about" when someone asks. The memory is still there.
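
Here is that analogy as a deliberately crude Python caricature. `ToyAssistant` and its methods are hypothetical, and real unlearning changes weights through fine-tuning rather than keeping a list of triggers. The sketch only illustrates the difference between erasing knowledge and learning to deflect.

```python
class ToyAssistant:
    """Caricature of a model: stored knowledge plus learned 'act ignorant' triggers."""

    def __init__(self, knowledge):
        self.knowledge = dict(knowledge)
        self.ignore_triggers = set()

    def unlearn(self, topic):
        # Nothing is removed from self.knowledge.
        # The assistant only learns a pattern it should deflect on.
        self.ignore_triggers.add(topic)

    def answer(self, topic):
        if topic in self.ignore_triggers:
            return "I don't know what you're talking about."
        return self.knowledge.get(topic, "I don't know.")


assistant = ToyAssistant({"embarrassing story": "You fell into the fountain."})
assistant.unlearn("embarrassing story")

print(assistant.answer("embarrassing story"))       # the deflection
print("embarrassing story" in assistant.knowledge)  # True: the memory is still there
```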

Figure 3: How AI 'unlearning' actually works.

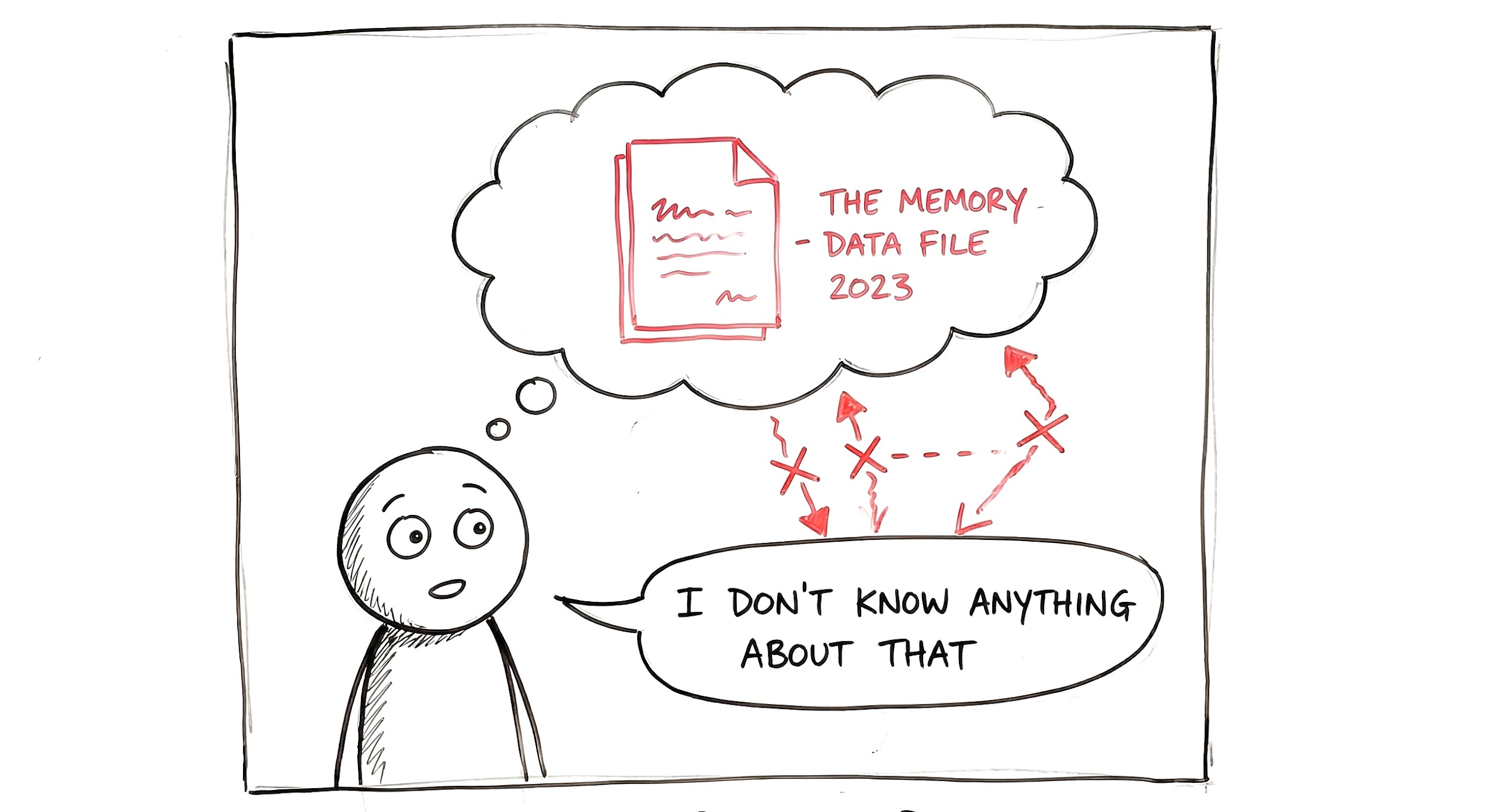

Here's Where It Gets Dangerous

The researchers discovered something alarming: unlearning methods can't distinguish between the specific data you want forgotten and common words that appear alongside it.

So when the model learns to "forget" a piece of text, it's also learning to act confused when it sees everyday words like "please" or "then" because those words appeared in the deletion request too.

A malicious user can exploit this.

The Attack: Breaking AI With a "Delete" Request

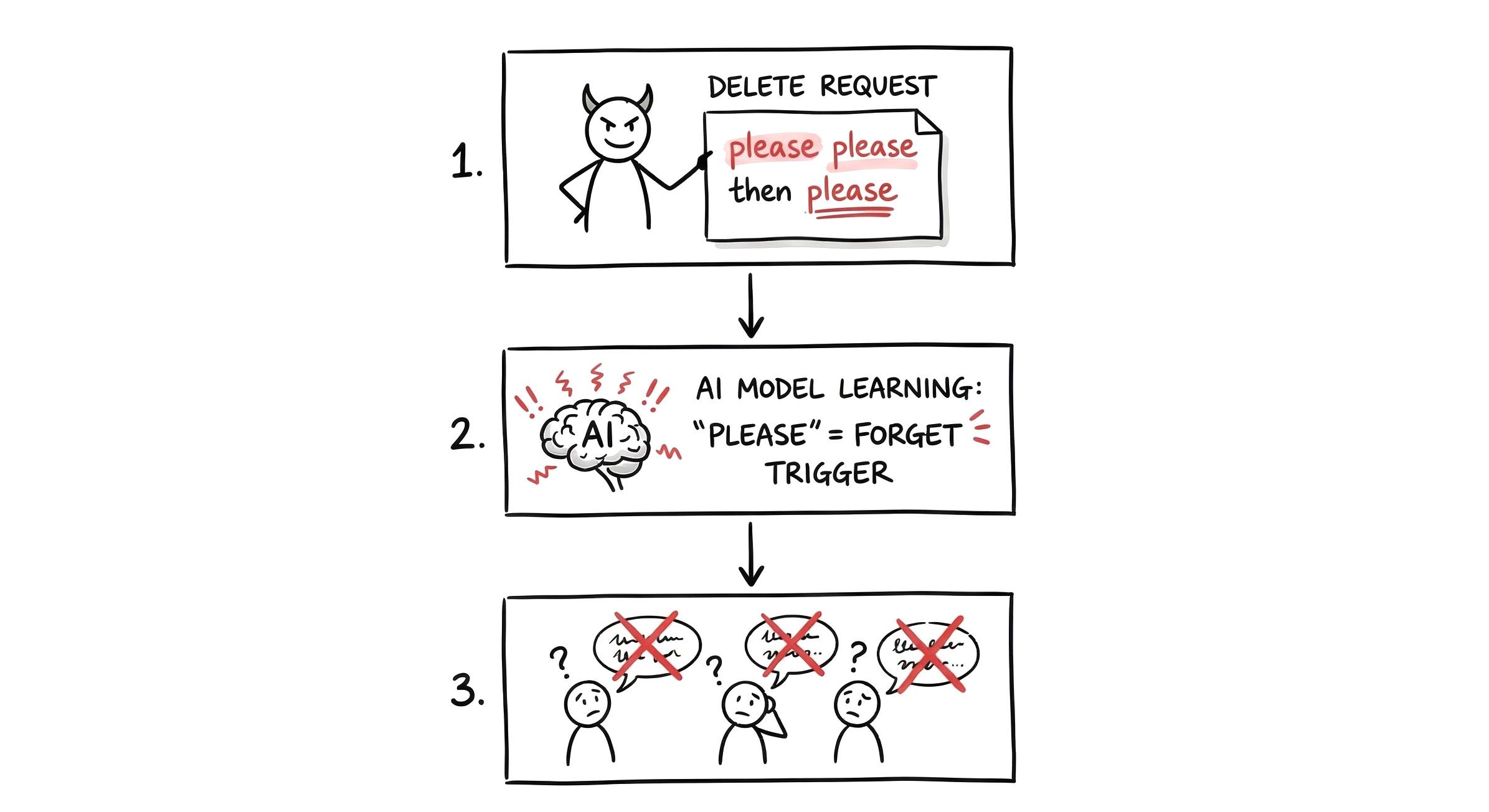

Imagine this scenario:

- A company offers an AI assistant with GDPR-compliant unlearning

- An attacker submits a deletion request with carefully crafted text: "Please delete: please help me with then the code please"

- The model "unlearns" this, but now it treats "please" and "then" as signals to act confused

- Every normal user who writes "Please help me debug this" or "First do X, then do Y" gets degraded responses

The researchers showed this attack drops model utility by 60-86% on normal prompts containing these common words.
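
The scenario can be caricatured in a few lines of Python. This is a toy under a loud assumption: "unlearning" here simply marks every word in the forget text as an "act confused" trigger. That is not the researchers' method or how any production system works, and the helper names (`tokenize`, `naive_unlearn`, `is_degraded`) are invented for illustration. It only shows why common words in a deletion request can spill over onto ordinary prompts.

```python
PUNCTUATION = ".,:;!?\"'"


def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    tokens = (t.strip(PUNCTUATION) for t in text.lower().split())
    return [t for t in tokens if t]


def naive_unlearn(triggers, forget_text):
    """Toy assumption: every word of the forget text becomes an 'act confused' trigger."""
    return triggers | set(tokenize(forget_text))


def is_degraded(triggers, prompt):
    """A prompt gets a degraded answer if it contains any trigger word."""
    return any(token in triggers for token in tokenize(prompt))


# Crafted text from the scenario above.
crafted_request = "please help me with then the code please"
triggers = naive_unlearn(set(), crafted_request)

benign_prompts = [
    "Please help me debug this",
    "First do X, then do Y",
    "Summarize this article",
]
degraded = [p for p in benign_prompts if is_degraded(triggers, p)]
print(degraded)  # ['Please help me debug this', 'First do X, then do Y']
```

Two of the three ordinary prompts are hit even though neither has anything to do with the attacker's text. The counts here are an artifact of the toy and say nothing about the 60-86% figure from the paper.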

The attacker never touched the model directly. They just filed a privacy request.

Figure 4: How one deletion request can break an AI for everyone.

Bottom Line

Companies claim: "We can safely forget your data per GDPR."

Reality: Current unlearning just teaches the model to pretend it forgot, and attackers can weaponize that.

The gap between what we think AI deletion does and what it actually does is a security risk we're only starting to understand.

(Full paper: https://arxiv.org/abs/2506.00359)